The Pareto principle (also known as the 80/20 rule, the law of the vital few and the principle of factor sparsity[1][2]) states that for many outcomes, roughly 80% of consequences come from 20% of causes (the "vital few").[1]

In 1941, management consultant Joseph M. Juran developed the concept in the context of quality control and improvement after reading the works of Italian sociologist and economist Vilfredo Pareto, who wrote in 1906 about the 80/20 connection while teaching at the University of Lausanne.[3] In his first work, Cours d'économie politique, Pareto showed that approximately 80% of the land in the Kingdom of Italy was owned by 20% of the population. The Pareto principle is only tangentially related to Pareto efficiency.

Mathematically, the 80/20 rule is roughly described by a power law distribution (also known as a Pareto distribution) for a particular set of parameters. Many natural phenomena are distributed according to power law statistics.[4] It is an adage of business management that "80% of sales come from 20% of clients."[5]

History

In 1941, Joseph M. Juran, a Romanian-born American engineer, came across the work of Italian polymath Vilfredo Pareto. Pareto noted that approximately 80% of Italy's land was owned by 20% of the population.[6][4] Juran applied to quality issues the observation that 80% of a problem stems from 20% of its causes. Later in his career, Juran preferred to describe this as "the vital few and the useful many" to highlight that the contribution of the remaining 80% should not be discarded entirely.[7]

Mathematical explanation

The Pareto principle arises when a large proportion of process variation is associated with a small proportion of process variables.[2] This is a special case of the wider phenomenon of Pareto distributions. If the Pareto index α, one of the parameters characterizing a Pareto distribution, is chosen as $\alpha = \log_4 5 \approx 1.16$, then 80% of effects come from 20% of causes.[8]
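As a sketch of where this value comes from, assume the standard result that, for a Pareto distribution with α > 1, the top fraction p of causes accounts for a share S(p) of effects given by the expression below (the symbols p and S are notation introduced here, not taken from the cited sources):

$$S(p) = p^{\,1 - 1/\alpha}$$

Requiring that the top 20% account for 80% gives

$$0.2^{\,1 - 1/\alpha} = 0.8 \;\Longrightarrow\; \frac{1}{\alpha} = 1 - \frac{\ln 0.8}{\ln 0.2} = \frac{\ln 0.25}{\ln 0.2} = \frac{\ln 4}{\ln 5} \;\Longrightarrow\; \alpha = \frac{\ln 5}{\ln 4} = \log_4 5 \approx 1.161$$

Under the same assumption the rule applies recursively: the top 4% (20% of 20%) account for $0.04^{\,1 - 1/\alpha} = 0.64$, that is, 64% of effects.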

The term 80/20 is only a shorthand for the general principle at work. In individual cases, the distribution could be nearer to 90/10 or 70/30. There is also no need for the two numbers to add up to 100, as they are measures of different things. The Pareto principle is an illustration of a "power law" relationship, which also occurs in phenomena such as bush fires and earthquakes.[9] Because it is self-similar over a wide range of magnitudes, it produces outcomes completely different from those of normally (Gaussian) distributed phenomena. This has been cited as an explanation for the frequent breakdowns of sophisticated financial instruments, which are modeled on the assumption that a Gaussian relationship is appropriate to something like stock price movements.[10]
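To illustrate how other splits such as 90/10 or 70/30 correspond to different Pareto indices, here is a minimal Python sketch. It assumes an exact Pareto distribution with α > 1, for which the top fraction p of causes yields a share p^(1 − 1/α) of effects; the function name `pareto_index` is hypothetical and chosen only for this example.

```python
import math


def pareto_index(share: float, fraction: float) -> float:
    """Return the Pareto index alpha for which the top `fraction` of
    causes accounts for `share` of effects.

    Assumes an exact Pareto distribution with alpha > 1, where the top
    fraction p holds a share p ** (1 - 1/alpha). The two arguments are
    independent and need not add up to 1.
    """
    if not 0.0 < fraction < share < 1.0:
        raise ValueError("need 0 < fraction < share < 1")
    # Solve fraction ** (1 - 1/alpha) == share for alpha.
    return math.log(fraction) / math.log(fraction / share)


for share, fraction in [(0.9, 0.1), (0.8, 0.2), (0.7, 0.3)]:
    alpha = pareto_index(share, fraction)
    print(f"{share:.0%}/{fraction:.0%}: alpha = {alpha:.3f}")
```

Under these assumptions the three splits give α of roughly 1.048, 1.161 and 1.421: the more concentrated the split, the closer α is to 1.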

Gini coefficient and Hoover index

Using the "A:B" notation (for example, 0.8:0.2) and with A + B = 1, inequality measures like the Gini index (G) and the Hoover index (H) can be computed. In this case both are the same:

$$H = G = |2A - 1| = |1 - 2B|$$

For the 0.8:0.2 case this gives H = G = 0.6.
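As a rough numerical check, the Python sketch below computes both indices directly from their usual definitions for a two-group population, in which a fraction B of the population holds a share A of the total and the remaining fraction A holds the share B. The function name `two_class_indices` and the two-group model are assumptions made for this illustration; for any such split both values come out equal to |2A − 1|.

```python
def two_class_indices(a: float) -> tuple[float, float]:
    """Return (gini, hoover) for an A:B split with A + B = 1.

    Model: a fraction b = 1 - a of the population holds the share a of
    the total, and the remaining fraction a holds the share b.
    """
    if not 0.0 < a < 1.0:
        raise ValueError("a must lie strictly between 0 and 1")
    b = 1.0 - a
    # (population fraction, share of total) for each of the two groups,
    # ordered from poorest to richest per capita.
    groups = sorted([(a, b), (b, a)], key=lambda g: g[1] / g[0])

    # Hoover index: half the summed absolute gap between share and population.
    hoover = 0.5 * sum(abs(share - pop) for pop, share in groups)

    # Gini index: 1 minus twice the area under the Lorenz curve
    # (trapezoid rule over the two segments).
    twice_area = 0.0
    cumulative_share = 0.0
    for pop, share in groups:
        twice_area += pop * (2.0 * cumulative_share + share)
        cumulative_share += share
    gini = 1.0 - twice_area
    return gini, hoover


gini, hoover = two_class_indices(0.8)
print(round(gini, 6), round(hoover, 6))
```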

Analysis

Pareto analysis is a formal technique useful where many possible courses of action are competing for attention. In essence, the problem-solver estimates the benefit delivered by each action, then selects a number of the most effective actions that deliver a total benefit reasonably close to the maximal possible one.

Pareto analysis is a creative way of looking at causes of problems because it helps stimulate thinking and organize thoughts. However, it can be limited by its exclusion of possibly important problems that are small initially but grow with time. It should be combined with other analytical tools, such as failure mode and effects analysis and fault tree analysis.[citation needed]

This technique helps to identify the top portion of causes that need to be addressed to resolve the majority of problems. Once the predominant causes are identified, tools like the Ishikawa diagram (fishbone analysis) can be used to identify the root causes of the problems. While it is common to refer to the Pareto principle as the "80/20 rule", under the assumption that, in all situations, 20% of causes determine 80% of problems, this ratio is merely a convenient rule of thumb and should not be considered an immutable law of nature.

The application of the Pareto analysis in risk management allows management to focus on those risks that have the most impact on the project.[11]

Steps to identify the important causes using 80/20 rule:[12]

- Form a table of causes with the frequency of occurrence of each as a percentage

- Arrange the rows in decreasing order of importance of the causes (i.e., the most important cause first)

- Add a cumulative percentage column to the table, then plot the information

- Plot (#1) a curve with causes on x- and cumulative percentage on y-axis

- Plot (#2) a bar graph with causes on x- and percent frequency on y-axis

- Draw a horizontal dotted line at 80% from the y-axis to intersect the curve. Then draw a vertical dotted line from the point of intersection to the x-axis. The vertical dotted line separates the important causes (on the left) and trivial causes (on the right)

- Explicitly review the chart to ensure that causes for at least 80% of the problems are captured
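The tabular part of these steps can be sketched in Python as follows. This is only an illustration: the function name `pareto_table`, the 80% default threshold parameter and the defect counts are all hypothetical, and the two plotting steps are left out.

```python
def pareto_table(counts: dict[str, int], threshold: float = 80.0):
    """Rank causes by frequency and find those covering `threshold` percent.

    Returns (rows, vital), where rows is a list of
    (cause, percent, cumulative_percent) in decreasing order of frequency,
    and vital lists the leading causes whose cumulative percentage first
    reaches the threshold.
    """
    total = sum(counts.values())
    # Arrange causes in decreasing order of importance.
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)

    rows = []
    running = 0
    for cause, count in ranked:
        running += count
        # Percent frequency and cumulative percentage columns.
        rows.append((cause, 100.0 * count / total, 100.0 * running / total))

    # Equivalent of the horizontal line at the threshold on the chart:
    # keep causes until the cumulative percentage reaches it.
    vital = []
    for cause, _, cumulative in rows:
        vital.append(cause)
        if cumulative >= threshold:
            break
    return rows, vital


# Hypothetical defect counts, for illustration only.
defects = {"scratches": 45, "misalignment": 30, "wrong label": 12,
           "loose screw": 8, "other": 5}
rows, vital = pareto_table(defects)
for cause, percent, cumulative in rows:
    print(f"{cause:<14}{percent:6.1f}{cumulative:7.1f}")
print("vital few:", vital)
```

With these made-up counts, the first three causes are needed to cover at least 80% of the defects, which shows that the split found in practice need not be exactly 80/20.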

Applications

Economics

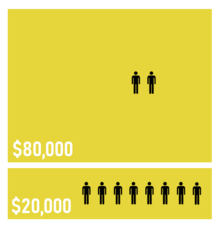

Pareto's observation was in connection with population and wealth. Pareto noticed that approximately 80% of Italy's land was owned by 20% of the population.[6] He then carried out surveys on a variety of other countries and found to his surprise that a similar distribution applied.[citation needed]

A chart that demonstrated the effect appeared in the 1992 United Nations Development Program Report, which showed that the richest 20% of the world's population receives 82.7% of the world's income.[13] However, among nations, the Gini index shows that wealth distributions vary substantially around this norm.[14]

| Quintile of population | Share of world income |
|---|---|
| Richest 20% | 82.70% |
| Second 20% | 11.75% |
| Third 20% | 2.30% |
| Fourth 20% | 1.85% |
| Poorest 20% | 1.40% |

The principle also holds within the tails of the distribution. The physicist Victor Yakovenko of the University of Maryland, College Park and AC Silva analyzed income data from the US Internal Revenue Service from 1983 to 2001 and found that the income distribution of the richest 1–3% of the population also follows Pareto's principle.[16]

In Talent: How to Identify Entrepreneurs, economist Tyler Cowen and entrepreneur Daniel Gross suggest that the Pareto Principle can be applied to the role of the 20% most talented individuals in generating the majority of economic growth.[17] According to the New York Times in 1988, many video rental shops reported that 80% of revenue came from 20% of videotapes (although rarely rented classics such as Gone with the Wind must be stocked to appear to have a good selection).[18]

Computing

In computer science the Pareto principle can be applied to optimization efforts.[19] For example, Microsoft noted that by fixing the top 20% of the most-reported bugs, 80% of the related errors and crashes in a given system would be eliminated.[20] Lowell Arthur expressed that "20% of the code has 80% of the errors. Find them, fix them!"[21] It was also discovered that, in general, 80% of a piece of software can be written in 20% of the total allocated time. Conversely, the hardest 20% of the code takes 80% of the time. This factor is usually a part of COCOMO estimating for software coding.[citation needed]

Occupational health and safety

Occupational health and safety professionals use the Pareto principle to underline the importance of hazard prioritization. Assuming 20% of the hazards account for 80% of the injuries, and by categorizing hazards, safety professionals can target those 20% of the hazards that cause 80% of the injuries or accidents. Alternatively, if hazards are addressed in random order, a safety professional is more likely to fix one of the 80% of hazards that account only for some fraction of the remaining 20% of injuries.[22]

Aside from making accident prevention more efficient, applying the Pareto principle means hazards are addressed in an economical order, so that resources are directed to where they prevent the most accidents.[23]

Engineering and quality control

The Pareto principle is the basis for the Pareto chart, one of the key tools used in total quality control and Six Sigma techniques. The Pareto principle serves as a baseline for ABC-analysis and XYZ-analysis, widely used in logistics and procurement for the purpose of optimizing stock of goods, as well as costs of keeping and replenishing that stock.[24] In engineering control theory, such as for electromechanical energy converters, the 80/20 principle applies to optimization efforts.[19]

The remarkable success of statistically based searches for root causes is based upon a combination of an empirical principle and mathematical logic. The empirical principle is usually known as the Pareto principle.[25] With regard to variation causality, this principle states that there is a non-random distribution of the slopes of the numerous (theoretically infinite) terms in the general equation.

All of the terms are independent of each other by definition. Interdependent factors appear as multiplication terms. The Pareto principle states that the effect of the dominant term is very much greater than the second-largest effect term, which in turn is very much greater than the third, and so on.[26] There is no explanation for this phenomenon; that is why we refer to it as an empirical principle.

The mathematical logic is known as the square-root-of-the-sum-of-the-squares axiom. This states that the variation caused by the steepest slope must be squared, and then the result added to the square of the variation caused by the second-steepest slope, and so on. The total observed variation is then the square root of the total sum of the variation caused by individual slopes squared. This derives from the probability density function for multiple variables or the multivariate distribution (we are treating each term as an independent variable).

The combination of the Pareto principle and the square-root-of-the-sum-of-the-squares axiom means that the strongest term in the general equation totally dominates the observed variation of effect. Thus, the strongest term will dominate the data collected for hypothesis testing.
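A small numerical sketch in Python shows how strongly the largest term dominates under the root-sum-of-squares rule. The three contribution values are hypothetical, chosen so that each is a few times larger than the next, and the function name `total_variation` is made up for this example.

```python
import math


def total_variation(contributions):
    """Combine independent contributions as the square root of the sum of squares."""
    return math.sqrt(sum(c * c for c in contributions))


# Hypothetical variation caused by the three steepest slopes, largest first.
contributions = [10.0, 3.0, 1.0]

total = total_variation(contributions)
without_dominant = total_variation(contributions[1:])
dominant_ratio = contributions[0] / total

print(round(total, 2))             # 10.49
print(round(dominant_ratio, 3))    # 0.953
print(round(without_dominant, 2))  # 3.16
```

In this example, eliminating the two smaller terms entirely would reduce the observed variation by less than 5%, whereas eliminating the dominant term alone would cut it by roughly 70%.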

In the systems science discipline, Joshua M. Epstein and Robert Axtell created an agent-based simulation model called Sugarscape, from a decentralized modeling approach, based on individual behavior rules defined for each agent in the economy. Wealth distribution and Pareto's 80/20 principle emerged in their results, which suggests the principle is a collective consequence of these individual rules.[27]

Health and social outcomes

In 2009, the Agency for Healthcare Research and Quality said 20% of patients incurred 80% of healthcare expenses due to chronic conditions.[28] A 2021 analysis showed unequal distribution of healthcare costs, with older patients and those with poorer health incurring more costs.[29] The 20/80 rule has been proposed as a rule of thumb for the infection distribution in superspreading events.[30][31] However, the degree of infectiousness has been found to be distributed continuously in the population.[31] In epidemics with super-spreading, the majority of individuals infect relatively few secondary contacts.

See also

- 1% rule – Hypothesis that more people will lurk in a virtual community than will participate

- 10/90 gap – Health statistic

- Ninety–ninety rule – Humorous aphorism in computer programming

- Sturgeon's law – "Ninety percent of everything is crap"

References

1. Bunkley, Nick (March 3, 2008). "Joseph Juran, 103, Pioneer in Quality Control, Dies". The New York Times. Archived from the original on September 6, 2017. Retrieved 25 January 2018.

2. Box, George E.P.; Meyer, R. Daniel (1986). "An Analysis for Unreplicated Fractional Factorials". Technometrics. 28 (1): 11–18. doi:10.1080/00401706.1986.10488093.

3. Pareto, Vilfredo (1896–1897). Cours d'Économie Politique (in two volumes). F. Rouge (Lausanne) & F. Pichon (Paris).

4. Newman, MEJ (2005). "Power laws, Pareto Distributions, and Zipf's law" (PDF). Contemporary Physics. 46 (5): 323–351. arXiv:cond-mat/0412004. Bibcode:2005ConPh..46..323N. doi:10.1080/00107510500052444. S2CID 202719165. Retrieved 10 April 2011.

5. Marshall, Perry (2013-10-09). "The 80/20 Rule of Sales: How to Find Your Best Customers". Entrepreneur. Retrieved 2018-01-05.

6. Pareto, Vilfredo; Page, Alfred N. (1971), Translation of Manuale di economia politica ("Manual of political economy"), A.M. Kelley, ISBN 978-0-678-00881-2

7. "Pareto Principle (80/20 Rule) & Pareto Analysis Guide". Juran. 2019-03-12. Retrieved 2021-02-27.

8. Dunford (2014), "The Pareto Principle" (PDF), The Plymouth Student Scientist

9. Bak, Per (1999), How Nature Works: the science of self-organized criticality, Springer, p. 89, ISBN 0-387-94791-4

10. Taleb, Nassim (2007), The Black Swan, pp. 229–252, 274–285

11. David Litten, Project Risk and Risk Management, Retrieved May 16, 2010

12. "Pareto Analysis". Archived from the original on 8 February 2012. Retrieved 12 January 2012.

13. United Nations Development Program (1992), 1992 Human Development Report, New York: Oxford University Press

14. Hillebrand, Evan (June 2009). "Poverty, Growth, and Inequality over the Next 50 Years" (PDF). FAO, United Nations – Economic and Social Development Department. Archived from the original (PDF) on 2017-10-20.

15. Human Development Report 1992, Chapter 3, retrieved 2007-07-08

16. Yakovenko, Victor M.; Silva, A. Christian (2005), Chatterjee, Arnab; Yarlagadda, Sudhakar; Chakrabarti, Bikas K. (eds.), "Two-class Structure of Income Distribution in the USA: Exponential Bulk and Power-law Tail", Econophysics of Wealth Distributions: Econophys-Kolkata I, New Economic Windows, Springer Milan, pp. 15–23, doi:10.1007/88-470-0389-x_2, ISBN 978-88-470-0389-7

17. Paris Aéroport, Paris Vous Aime Magazine, No 13, avril-may-juin 2023, p. 71

18. Kleinfield, N. R. (1988-05-01). "A Tight Squeeze at Video Stores". The New York Times. ISSN 0362-4331. Archived from the original on 2015-05-25.

19. Gen, M.; Cheng, R. (2002), Genetic Algorithms and Engineering Optimization, New York: Wiley

20. Rooney, Paula (October 3, 2002), Microsoft's CEO: 80–20 Rule Applies To Bugs, Not Just Features, ChannelWeb

21. Pressman, Roger S. (2010). Software Engineering: A Practitioner's Approach (7th ed.). Boston, Mass: McGraw-Hill. ISBN 978-0-07-337597-7.

22. Woodcock, Kathryn (2010). Safety Evaluation Techniques. Toronto, ON: Ryerson University. p. 86.

23. "Introduction to Risk-based Decision-Making" (PDF). USCG Safety Program. United States Coast Guard. Retrieved 14 January 2012.

24. Rushton, Oxley & Croucher (2000), pp. 107–108.

25. Juran, Joseph M., Frank M. Gryna, and Richard S. Bingham. Quality control handbook. Vol. 3. New York: McGraw-Hill, 1974.

26. Shainin, Richard D. "Strategies for Technical Problem Solving." 1992, Quality Engineering, 5:3, 433–448

27. Epstein, Joshua; Axtell, Robert (1996), Growing Artificial Societies: Social Science from the Bottom-Up, MIT Press, p. 208, ISBN 0-262-55025-3

28. Weinberg, Myrl (July 27, 2009). "Myrl Weinberg: In health-care reform, the 20-80 solution". The Providence Journal. Archived from the original on 2009-08-02.

29. Sawyer, Bradley; Claxton, Gary. "How do health expenditures vary across the population?". Peterson-Kaiser Health System Tracker. Peterson Center on Healthcare and the Kaiser Family Foundation. Retrieved 13 March 2019.

30. Galvani, Alison P.; May, Robert M. (2005). "Epidemiology: Dimensions of superspreading". Nature. 438 (7066): 293–295. Bibcode:2005Natur.438..293G. doi:10.1038/438293a. PMC 7095140. PMID 16292292.

31. Lloyd-Smith, JO; Schreiber, SJ; Kopp, PE; Getz, WM (2005). "Superspreading and the effect of individual variation on disease emergence". Nature. 438 (7066): 355–359. Bibcode:2005Natur.438..355L. doi:10.1038/nature04153. PMC 7094981. PMID 16292310.

Further reading

- Bookstein, Abraham (1990), "Informetric distributions, part I: Unified overview", Journal of the American Society for Information Science, 41 (5): 368–375, doi:10.1002/(SICI)1097-4571(199007)41:5<368::AID-ASI8>3.0.CO;2-C

- Klass, O. S.; Biham, O.; Levy, M.; Malcai, O.; Soloman, S. (2006), "The Forbes 400 and the Pareto wealth distribution", Economics Letters, 90 (2): 290–295, doi:10.1016/j.econlet.2005.08.020

- Koch, R. (2004), Living the 80/20 Way: Work Less, Worry Less, Succeed More, Enjoy More, London: Nicholas Brealey Publishing, ISBN 1-85788-331-4

- Reed, W. J. (2001), "The Pareto, Zipf and other power laws", Economics Letters, 74 (1): 15–19, doi:10.1016/S0165-1765(01)00524-9

- Rosen, K. T.; Resnick, M. (1980), "The size distribution of cities: an examination of the Pareto law and primacy", Journal of Urban Economics, 8 (2): 165–186, doi:10.1016/0094-1190(80)90043-1

- Rushton, A.; Oxley, J.; Croucher, P. (2000), The handbook of logistics and distribution management (2nd ed.), London: Kogan Page, ISBN 978-0-7494-3365-9.
